Object-Oriented, Vector-Native, Enterprise-Ready: Insights for the Next Generation of AI

Enterprises have treated model size as a measure of intelligence, but Large Language Models come with cost, risk, and dependency. Small Language Models, deployed through FlexVertex’s pluggable substrate, restore control, enabling affordable, private, domain-tuned intelligence at the edge and beyond.

Most enterprises are unknowingly building their AI future inside someone else’s walls. When models or APIs change, their innovation stalls. FlexVertex prevents that dependence by making intelligence pluggable and portable—so organizations keep control of their data, costs, and direction, no matter how the AI landscape shifts.

Relational joins, essentially unchanged since the 1970s, remain the weak link in AI pipelines. They fracture context, add latency, and force brittle schemas. FlexVertex replaces joins with native connections, inheritance, and integrated embeddings. For enterprises, that means models trained on richer context, inferences drawn from complete structures, and outcomes that are explainable and trustworthy. The future of AI doesn’t live in join tables—it lives in connected meaning.

AI workloads don’t fit neatly into documents, graphs, or vector embeddings alone. They require all three, working in concert. Traditional platforms split these into silos, slowing development and weakening outcomes. FlexVertex unifies them natively, letting enterprises train, infer, and act on a complete context. For recommendation systems, copilots, and intelligent applications, this means faster cycles, sharper insights, and more reliable AI. FlexVertex provides the integrated substrate that modern AI demands.
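As a rough illustration of what "all three, working in concert" can mean for a recommendation query, the sketch below combines a graph step, a document filter, and a vector ranking in plain Python. It is not FlexVertex code: the `products`, `purchased`, and `related` structures and the `recommend` function are hypothetical names invented for this example.

```python
from math import sqrt

# Hypothetical in-memory data; none of these names come from FlexVertex.
# Document view: each product carries ordinary fields plus an embedding.
products = {
    "p1": {"in_stock": True, "vec": [1.0, 0.0]},
    "p2": {"in_stock": True, "vec": [0.6, 0.8]},
    "p3": {"in_stock": False, "vec": [1.0, 0.0]},
    "p4": {"in_stock": True, "vec": [0.0, 1.0]},
}
# Graph view: direct connections between records.
purchased = {"alice": ["p1"]}
related = {"p1": ["p2", "p3", "p4"]}


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(user, query_vec):
    # 1. Graph step: follow purchase and related-product connections.
    candidates = {r for p in purchased.get(user, []) for r in related.get(p, [])}
    # 2. Document step: filter on an ordinary field.
    available = [c for c in candidates if products[c]["in_stock"]]
    # 3. Vector step: rank what remains by similarity to the query embedding.
    return sorted(
        available,
        key=lambda c: cosine(products[c]["vec"], query_vec),
        reverse=True,
    )


print(recommend("alice", [1.0, 0.0]))  # ['p2', 'p4']
```

In a siloed setup, each of the three steps would typically run against a different system, with application code carrying intermediate results between them.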

Centralized GPU farms are not enough. FlexVertex enables vector-native AI to run consistently at the edge, on lightweight embedded devices, with full support for search, inheritance, hybrid queries, and governance. Whether in defense, healthcare, or industrial IoT, this approach ensures low-latency reasoning, privacy, and bandwidth savings without sacrificing functionality. The future of AI is distributed, and the edge must be as intelligent as the core.

Not all data is created equal. Proprietary IP, patient files, and defense records are the crown jewels—and they can’t leave the castle. FlexVertex ensures sovereignty by letting enterprises run AI on their terms, across any environment, without ever surrendering control of their most valuable assets.

Flat embeddings are brittle arrays that fail under enterprise demands. FlexVertex makes vectors object-oriented: structured bundles with inheritance, governance, and context. The result is scalable, explainable AI infrastructure that integrates seamlessly with data models, eliminates fragile hacks, and future-proofs enterprise systems against compliance, governance, and scalability challenges.

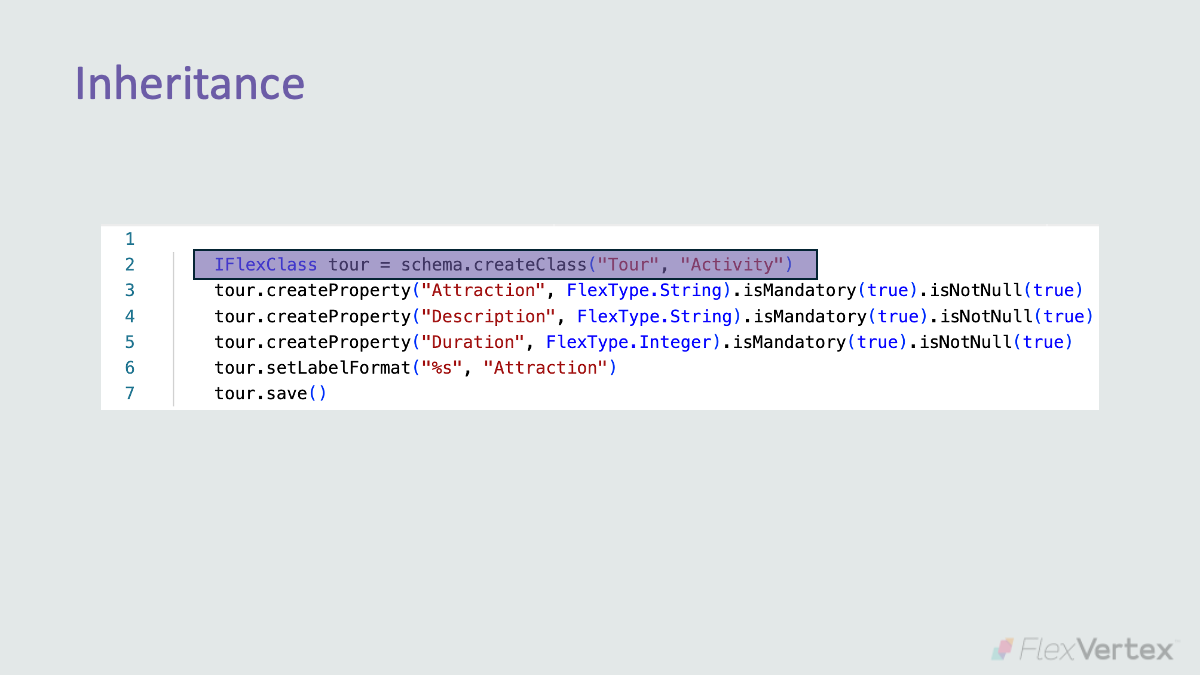

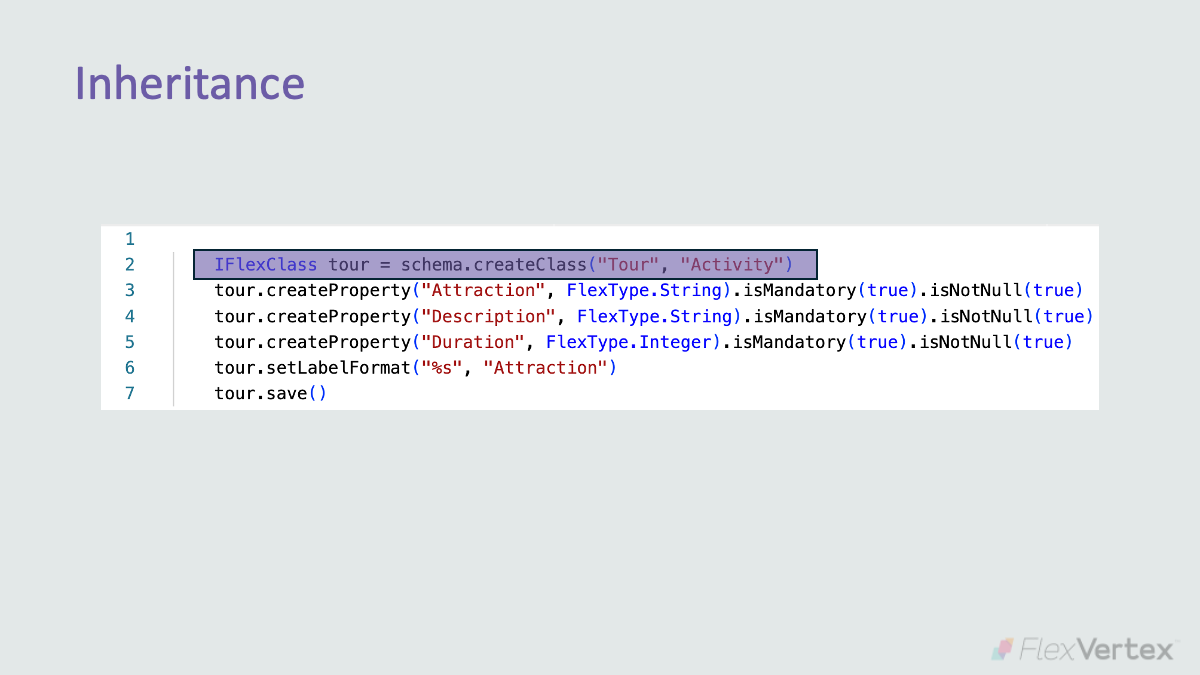

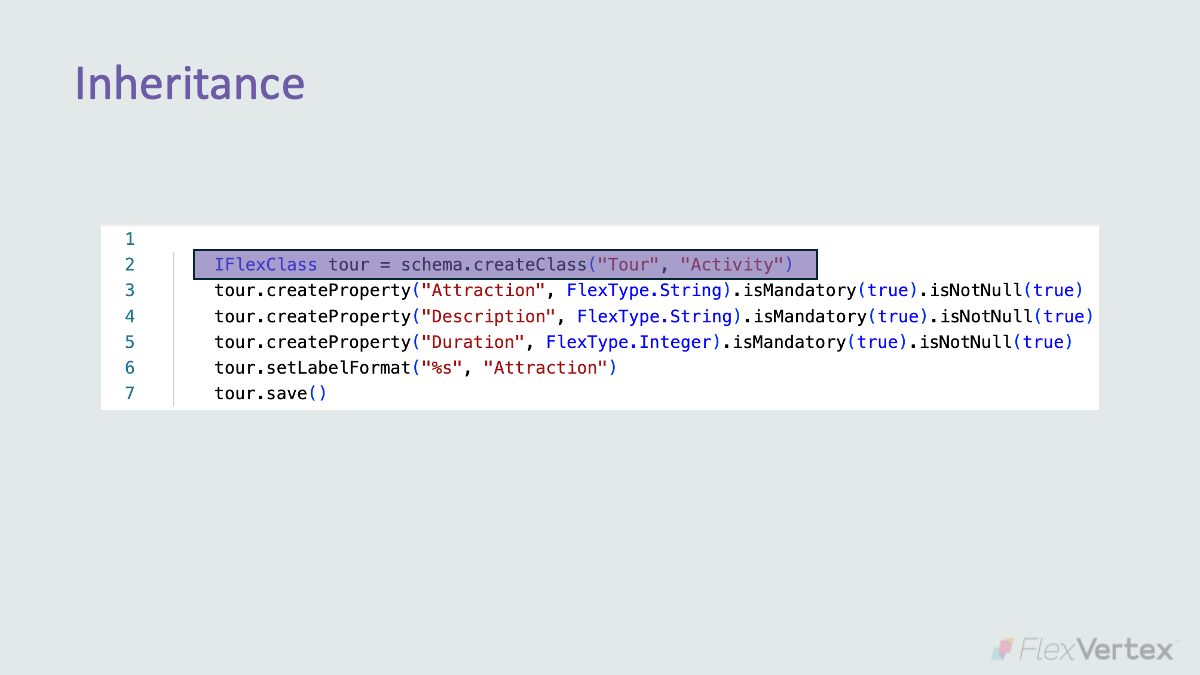

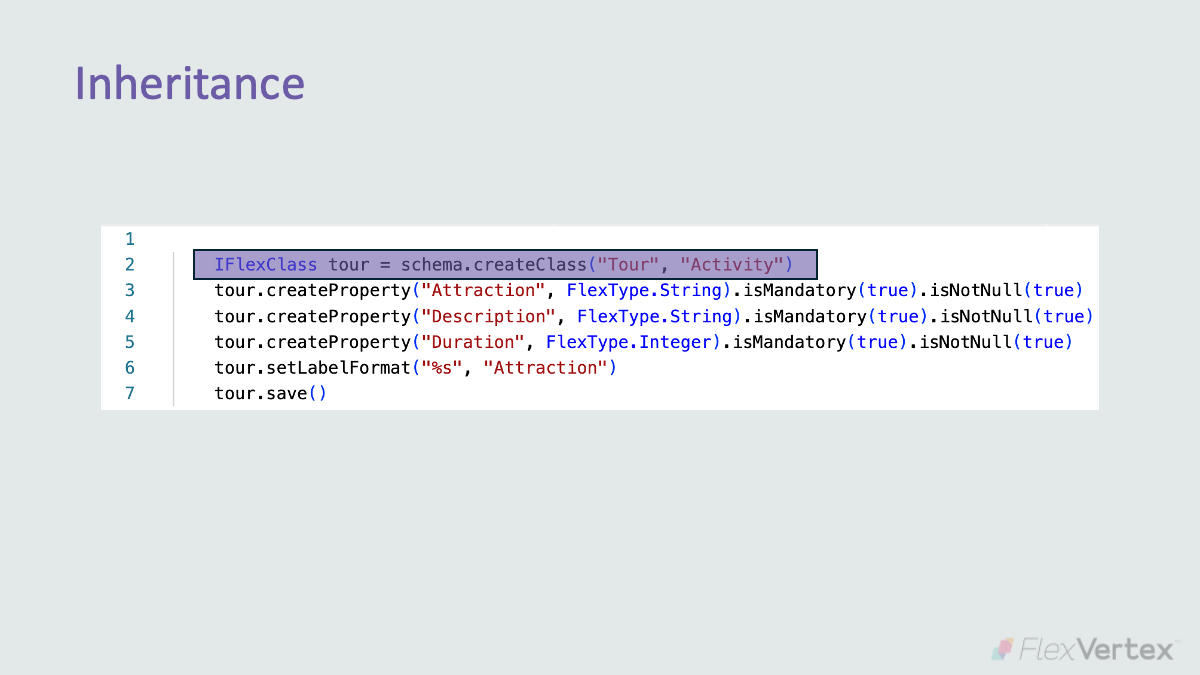

Governments and enterprises don’t operate on flat lists—they rely on layered hierarchies. FlexVertex embeds hierarchy directly into AI infrastructure through inheritance, ensuring policies cascade correctly, governance is enforced, and exceptions are preserved. The result is AI systems that reflect organizational reality, eliminating brittle workarounds and technical debt.
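To make the idea of cascading policies with preserved exceptions concrete, here is a minimal sketch in plain Python. It does not use or represent any FlexVertex API; the `OrgUnit` class, its fields, and the policy keys are assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class OrgUnit:
    """A node in an organizational hierarchy (hypothetical, not a FlexVertex type)."""
    name: str
    parent: Optional["OrgUnit"] = None
    # Policies set directly on this unit; they override inherited values.
    local_policies: Dict[str, Any] = field(default_factory=dict)

    def effective_policy(self, key: str) -> Any:
        """Walk up the hierarchy and return the nearest definition of a policy."""
        unit: Optional["OrgUnit"] = self
        while unit is not None:
            if key in unit.local_policies:
                return unit.local_policies[key]
            unit = unit.parent
        return None  # no unit in the chain defines this policy


agency = OrgUnit("agency", local_policies={"retention_days": 365, "export_allowed": False})
division = OrgUnit("division", parent=agency)
# The research unit records an explicit exception to the agency-wide rule.
research = OrgUnit("research", parent=division, local_policies={"export_allowed": True})

print(division.effective_policy("retention_days"))  # 365, inherited from agency
print(research.effective_policy("export_allowed"))  # True, local exception
print(division.effective_policy("export_allowed"))  # False, inherited from agency
```

The point of the design is that a rule is stated once at the level where it applies, and an exception is stated once where it differs, rather than copying the full policy set onto every unit.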

Many teams bolt vector search onto databases as an afterthought. It looks fast, but it builds fragility and technical debt. FlexVertex embeds vectors natively, as first-class objects with governance and security built in. The result is AI infrastructure that scales cleanly, without brittle patches or costly rewrites.

Traditional databases reduce embeddings to raw number arrays—opaque, fragile, and detached from context. FlexVertex redefines them as structured, inheritable objects with lineage and governance. The outcome: enterprise-ready AI infrastructure that adapts naturally, preserves meaning, and scales with evolving demands.
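The contrast between a raw number array and a structured, inheritable embedding can be sketched in plain Python as follows. This is an illustrative model only, not FlexVertex's object model: the `Embedding` and `ClinicalNoteEmbedding` classes and all of their field names are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List


@dataclass
class Embedding:
    """A vector that carries its own lineage and governance metadata (hypothetical)."""
    values: List[float]
    source_id: str                    # lineage: which record the vector was derived from
    model: str                        # lineage: which model produced it
    classification: str = "internal"  # governance label

    def cosine(self, other: "Embedding") -> float:
        # Vectors from different models are not comparable, so refuse to mix them.
        if self.model != other.model:
            raise ValueError("embeddings come from different models")
        dot = sum(x * y for x, y in zip(self.values, other.values))
        norm = sqrt(sum(x * x for x in self.values)) * sqrt(sum(y * y for y in other.values))
        if norm == 0:
            raise ValueError("cosine similarity is undefined for a zero vector")
        return dot / norm


@dataclass
class ClinicalNoteEmbedding(Embedding):
    """Inherits the base behavior but tightens the governance default."""
    classification: str = "restricted"
    patient_scope: str = "de-identified"


doc = Embedding([1.0, 0.0], source_id="doc-1", model="model-a")
note = ClinicalNoteEmbedding([1.0, 0.0], source_id="note-7", model="model-a")

print(doc.cosine(note))     # 1.0
print(note.classification)  # restricted
```

A bare list of floats could not reject the cross-model comparison or report where a vector came from; here that information travels with the vector, and the subclass changes policy without reimplementing the math.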

AI systems often isolate embeddings, documents, and people, leaving results shallow and unreliable. FlexVertex unifies these elements, preserving the relationships that matter. The outcome is AI infrastructure built for trust—explainable, contextual, and transparent—designed to scale smoothly with the full complexity of enterprise operations.

Enterprises rely on PDFs, videos, and media, yet most systems push them to the margins—disconnected and hard to manage. FlexVertex integrates assets as core objects within the database itself. The outcome: a governed, discoverable AI substrate where assets remain connected, contextual, and fully embedded in enterprise workflows.

Most query languages weren’t built for AI. They flatten data into static records, requiring glue code for relationships. Voyager, the FlexVertex query language, enables native traversal across embeddings, documents, and objects, preserving context. The result is faster insights, lower technical debt, and AI results that enterprises can actually trust.